Researchers from the AIRI Institute and the Center for Cognitive Modeling at MIPT have developed an approach that lets large language models work more effectively with three-dimensional space, the AIRI Institute's press service reported.

Unlike traditional models, which often rely on two-dimensional images or raw point clouds, the new method helps AI better understand the relationships between objects: for example, that a chair stands near a table and is meant for sitting.

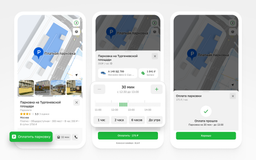

The system, called 3DGraphLLM, takes into account not only the objects themselves but also the spatial and semantic connections between them, which is especially important in rooms with many objects, such as kitchens, workshops or offices. The model was trained on well-known datasets that pair accurate 3D reconstructions of real rooms with text descriptions of objects, and it is built on the Vicuna-v1.5 and LLAMA3 language models.
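The idea of describing a room through objects and their connections can be illustrated with a toy example. The sketch below is not the 3DGraphLLM implementation: the `SceneObject` class, the `describe_scene` function, the "is near" relation based on the distance between object centers, and the sample coordinates are all assumptions made for illustration.

```python
from dataclasses import dataclass
from math import dist


@dataclass(frozen=True)
class SceneObject:
    """Hypothetical object record: a name, a semantic attribute, a 3D center."""
    name: str
    purpose: str
    center: tuple[float, float, float]


def nearest_neighbors(obj, objects, k):
    """Return the k objects whose centers are closest to obj (assumed heuristic)."""
    others = [o for o in objects if o is not obj]
    return sorted(others, key=lambda o: dist(obj.center, o.center))[:k]


def describe_scene(objects, k=1):
    """Turn each object and its k nearest neighbors into a short text relation."""
    lines = []
    for obj in objects:
        for other in nearest_neighbors(obj, objects, k):
            d = dist(obj.center, other.center)
            lines.append(
                f"{obj.name} ({obj.purpose}) is near {other.name}, {d:.1f} m away"
            )
    return lines


# Made-up sample room: coordinates are in meters and purely illustrative.
scene = [
    SceneObject("chair", "for sitting", (1.0, 0.0, 0.0)),
    SceneObject("table", "for working", (1.5, 0.0, 0.0)),
    SceneObject("lamp", "for lighting", (4.0, 0.0, 0.0)),
]
```

Calling `describe_scene(scene)` yields one relation per object, which is the kind of object-plus-connection description a language model could be given instead of an unordered list of objects.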

In tests, 3DGraphLLM surpassed many existing methods in object recognition accuracy, including other approaches based on language models. The model improved accuracy by more than 7% over the baseline solution. It also runs faster and uses fewer resources than the most recent comparable methods.

The team is now working on integrating the method into real robotic systems. The main goal is for robots not only to see objects but also to understand how they relate to one another, which will let them carry out user tasks effectively. These skills are key to creating a new generation of service and household robots.
