The State Duma is preparing amendments to several articles of the Criminal Code of the Russian Federation ("Defamation", "Theft", "Fraud", "Extortion", "Causing property damage by deception or abuse of trust") to address the use of deepfakes. For more than a year, cybercriminals have been actively using deepfakes, creating fake videos, voice recordings, and images of citizens with neural networks and AI, and using them for extortion and other crimes.

According to Izvestia, whose editors have reviewed the bill, it proposes adding a new aggravating circumstance to a number of articles of the Criminal Code of the Russian Federation: committing a crime using a person's image or voice (including falsified or artificially created ones) or biometric data. Those convicted of such crimes will face fines of up to 1.5 million rubles and up to seven years in prison.

The development of computer technologies has expanded the possibilities for creating video and audio materials from samples of people's images and voices, artificially recreating events that never happened. Modern hardware and software systems, along with neural networks and artificial intelligence (deepfakes, digital mask technologies, etc.), make it possible to create fakes that a non-specialist can hardly distinguish from reality.

Russian legislation currently contains no precise definition of a deepfake, nor does it provide for fines or criminal liability specifically for using deepfakes for criminal purposes. The author of the bill explained to Izvestia that the amendments are intended to close this gap.

"We propose introducing stricter liability for the use of this technology under a number of criminal articles. That it is actively used was already demonstrated during the president's Direct Line at the end of last year. There are many examples of people losing everything because their voices were forged. That is why we propose bringing the Criminal Code in line with modern realities."

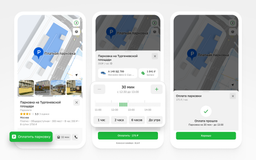

Meanwhile, Russians who want to disguise or change their voices, whether for illegal purposes or simply for pranks, are themselves already falling victim to attackers. It was recently revealed that attackers have begun disguising malicious files as neural network-based voice-changing applications. One such fake AI voice changer steals users' personal data and gives fraudsters access to their smartphone or other device.
