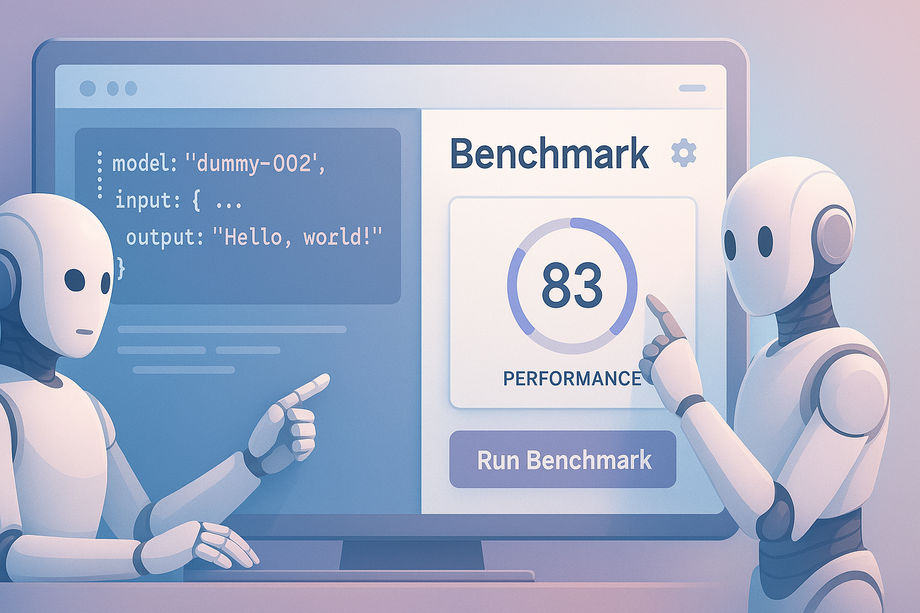

The AI Alliance has presented MERA Code, the first open benchmark for testing Russian-language AI models on programming tasks. Developed with the participation of leading technology companies and universities, including Sber, T-Bank, MWS AI (MTS Web Services), Rostelecom, Innopolis University, ITMO, Skoltech, Central University, and "Siberian Neural Networks," the tool is meant to address the lack of a unified standard for evaluating how well AI models generate code.

The benchmark offers a transparent methodology for evaluating large language models (LLMs) that takes the specifics of the Russian language into account. Unlike foreign counterparts, it includes 11 tasks in three formats: text2code (generating code from a description), code2text (documenting code), and code2code (optimizing and correcting code). Testing covers 8 programming languages: Python, Java, C#, JavaScript, Go, C, C++, and Scala.

An important difference is the isolated execution environment, in which the code is actually run rather than just analyzed, making the evaluation more objective. The platform is open to everyone: developers can compare models through a rating system, and researchers can use the framework for their own tests.
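To illustrate what running the code rather than just analyzing it can mean in practice, here is a minimal Python sketch of execution-based checking. It is not MERA Code's actual harness: the function name `passes_tests`, the timeout value, and the sample task are assumptions made for illustration, and a plain subprocess is far weaker isolation than a real sandbox such as a container.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def passes_tests(candidate_code: str, test_code: str, timeout_s: float = 5.0) -> bool:
    """Run model-generated code together with its tests in a separate process.

    Hypothetical helper: returns True only if the script exits with code 0,
    meaning every assertion in test_code held and nothing raised or timed out.
    """
    with tempfile.TemporaryDirectory() as workdir:
        script = Path(workdir) / "candidate.py"
        script.write_text(candidate_code + "\n\n" + test_code + "\n", encoding="utf-8")
        try:
            result = subprocess.run(
                # -I starts Python in isolated mode (ignores user site-packages
                # and PYTHON* environment variables); this is NOT a security sandbox.
                [sys.executable, "-I", str(script)],
                cwd=workdir,
                capture_output=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            # Treat code that hangs as a failed solution.
            return False
        return result.returncode == 0


if __name__ == "__main__":
    # Made-up text2code task: "write add(a, b) that returns the sum".
    tests = "assert add(2, 3) == 5"
    correct = "def add(a, b):\n    return a + b"
    wrong = "def add(a, b):\n    return a - b"
    print(passes_tests(correct, tests))
    print(passes_tests(wrong, tests))
```

The point of the design is that a solution is judged by its behavior on tests, not by how closely its text resembles a reference answer, so two differently written but correct solutions score the same.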

MERA Code will help:

- Developers: choose the best AI tools for the job.

- Researchers: compare models under uniform conditions.

- Companies: make decisions based on transparent data.

This is already the second branch of the MERA benchmark, which was first presented at AI Journey 2023. The industry version, MERA Industrial, appeared in June 2025, and it is now joined by a specialized solution for programmers.
